The AI Risk Stack: Securing GenAI for Use in the Enterprise

Generative AI (GenAI) and Large Language Models (LLMs) are everywhere you look – overtaking seemingly every tech headline, sparking boardroom conversation, and becoming a hot topic in job market reports. Despite GenAI’s evident popularity among individual users, widespread adoption in the enterprise is being met with skepticism.

A survey of 2,000 global executives conducted by Boston Consulting Group (BCG) found that more than 50% actively discourage GenAI adoption (Source). This statistic highlights a stark contrast between the enthusiasm seen at the individual level and the reservations prevailing in the corporate world.

It’s therefore no surprise that, when we talk to industry leaders and portfolio companies alike, most enterprise “adoption” today turns out to be experimentation. From organizing hackathons to developing basic chatbot applications, organizations are still in the early stages of exploring a comprehensive GenAI strategy.

This leads to a critical question: why are enterprises hesitant to embrace an era-defining technology that is capable of generating near-human-level intelligence?

While the future promise of GenAI is not in doubt, the risks it poses to enterprises today, from hallucinations to regulatory requirements, outweigh the potential benefits organizations might reap.

Consequently, many leaders are holding back on their GenAI plans until more effective mechanisms to control risk are widely available. In a recent KPMG survey, over 60% of corporate executives said they expect regulatory concerns to put a damper on their GenAI investment plans, and 40% explicitly reported a 3-6 month pause on GenAI investments to monitor the landscape (Source).

Solving for the Risks

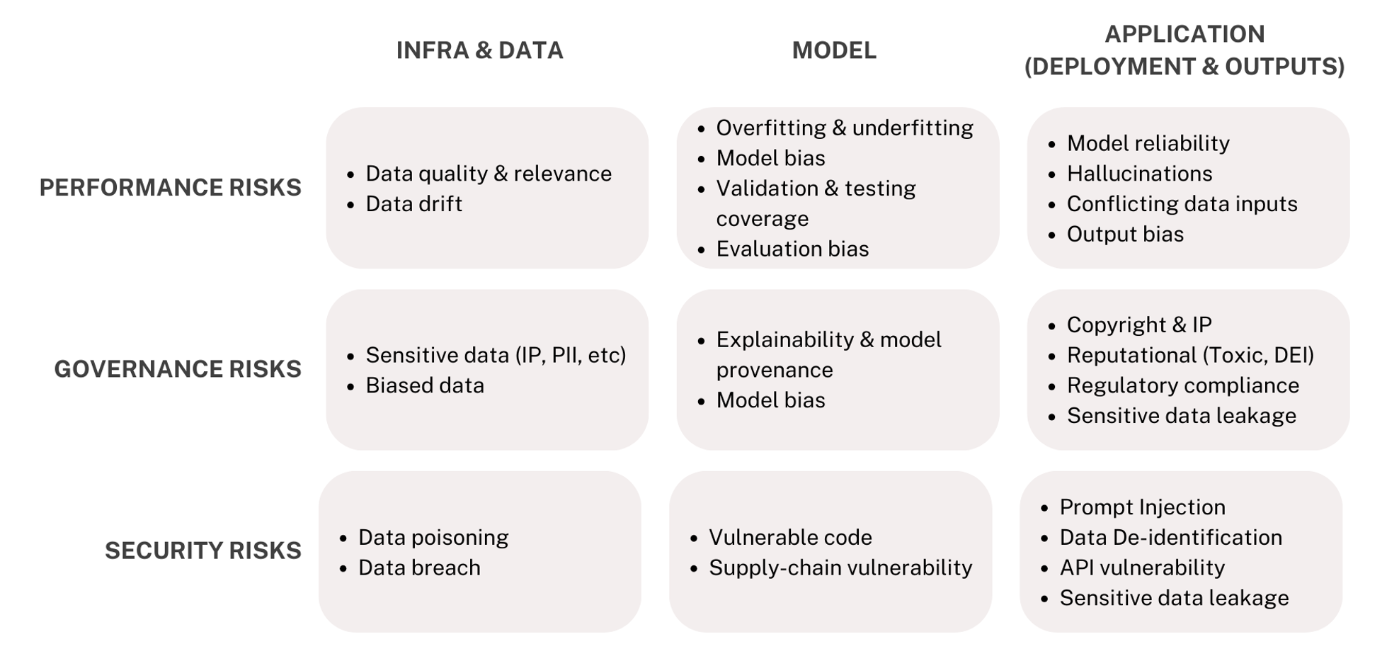

We categorize the risks faced by the enterprise into three buckets across the stack (non-exhaustive):

Performance risks primarily stem from how models are constructed and refined, significantly affecting their efficacy and accuracy.

While these risks can be introduced at any stage of implementation, they are a direct result of how data is incorporated into models. This ranges from the actual composition of a dataset to how data is treated during model calculation to how models are evaluated and updated based on new data. For instance, Retrieval Augmented Generation, or RAG, is a framework that enables real-time data retrieval and controls the data provided to an LLM at query time, helping to mitigate hallucinations and provide more contextually accurate responses.
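To make the RAG idea concrete, here is a minimal sketch of the retrieve-then-prompt pattern. It is an illustration under stated assumptions, not a production design: the `DOCUMENTS` list, the word-overlap scoring, and the function names are all hypothetical, and a real deployment would typically use embeddings and a vector store and then send the resulting prompt to an LLM.

```python
import re
from collections import Counter

# Hypothetical in-memory knowledge base (assumption for illustration only).
DOCUMENTS = [
    "The refund policy allows returns within 30 days of purchase.",
    "Support hours are 9am to 5pm Eastern, Monday through Friday.",
    "Enterprise plans include single sign-on and audit logging.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query, doc):
    """Count the tokens shared by the query and the document."""
    q = Counter(tokenize(query))
    d = Counter(tokenize(doc))
    return sum(min(q[t], d[t]) for t in q)


def retrieve(query, documents, k=2):
    """Return up to k documents with a non-zero overlap, best match first."""
    ranked = sorted(documents, key=lambda doc: score(query, doc), reverse=True)
    return [doc for doc in ranked[:k] if score(query, doc) > 0]


def build_prompt(query, documents, k=2):
    """Assemble the prompt that would be sent to an LLM at query time."""
    context = retrieve(query, documents, k)
    context_block = "\n".join(f"- {c}" for c in context) or "- (no relevant context found)"
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say so.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    print(build_prompt("What is the refund policy?", DOCUMENTS))
```

The point of the pattern is that the application, not the model, decides which data reaches the LLM for each query, which is where much of the control over hallucinations comes from.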

Given the rapid pace at which techniques like RAG and fine-tuning are being introduced and evolving, there’s an air of uncertainty about how emerging standalone solutions that address this risk layer will hold up in an ever-changing market. Model providers are ideally positioned to tackle at least a handful of the current issues. For instance, Grok, xAI’s new proprietary model, despite its limitations, is emerging as a formidable competitor to OpenAI due to its advantage in real-time data retrieval over static intelligence.

However, we do believe new tooling that can deliver end-to-end visibility or last-mile support (testing, evaluation, performance monitoring), especially across multi-model environments, will gain traction. These will likely emerge as developer tools and ML/LLM Ops platforms sold to technical users.

Governance risks encompass vulnerabilities that pose a threat of non-compliance with both internal policies and external regulatory frameworks.

While a slew of these risks are already well-defined, such as those around PII or biased data, there are also a number of new considerations that are introduced with generative capabilities, such as IP infringement or toxic text outputs.

Adding to that list is the growing tapestry of proposed regulations, which increases the complexity of compliant AI adoption. While the EU AI Act is the furthest along and most well-known proposal, more than 58 countries have introduced AI regulations of their own – all of which vary dramatically in their approach, penalties, and coverage.

Because of this, effective governance hinges on a dual approach: gaining a comprehensive understanding of GenAI usage throughout your environment and having well-defined internal and external policies to map and mitigate associated risks. This means knowing where employees have GenAI licenses, what internally developed projects and tools are incorporating LLMs, and how third-party vendors and applications are introducing GenAI.

This is a tall order in itself today. Putting aside the lack of clarity on the regulatory front, current tools primarily rely on manual user input for AI usage tracking. More sophisticated capabilities like vendor scanning and model detection, which enable automated assessments, proactive alerts, and audit logs, will be a compelling differentiator for emerging solutions.
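As a rough illustration of the dual approach described above (an inventory of GenAI usage plus policies mapped against it), the sketch below checks each inventory record against two example rules and emits audit-log entries. Everything here is hypothetical: the `GenAIUsage` fields, the source categories, the two policies, and the sample records are assumptions chosen for illustration, not a description of any vendor’s product.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class GenAIUsage:
    """One record in a hypothetical GenAI usage inventory."""
    name: str
    source: str  # "employee_license", "internal_project", or "third_party_vendor"
    handles_pii: bool
    approved: bool


def assess(usage):
    """Return the policy findings for a single inventory record."""
    findings = []
    if not usage.approved:
        findings.append("not on the approved list")
    if usage.handles_pii and usage.source == "third_party_vendor":
        findings.append("PII shared with a third-party vendor")
    return findings


def audit(inventory):
    """Build one audit-log entry per finding across the whole inventory."""
    log = []
    for usage in inventory:
        for finding in assess(usage):
            log.append({
                "timestamp": datetime.now(timezone.utc).isoformat(),
                "asset": usage.name,
                "source": usage.source,
                "finding": finding,
            })
    return log


# Sample records covering the three sources of GenAI usage (all made up).
inventory = [
    GenAIUsage("chat-assistant seats", "employee_license", handles_pii=False, approved=True),
    GenAIUsage("support-bot prototype", "internal_project", handles_pii=True, approved=False),
    GenAIUsage("crm summarizer add-on", "third_party_vendor", handles_pii=True, approved=True),
]

if __name__ == "__main__":
    for entry in audit(inventory):
        print(entry["asset"], "->", entry["finding"])
```

In this sketch the inventory is entered by hand, which mirrors the manual tracking most tools rely on today; automated vendor scanning or model detection would replace that step.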

As tools advance in this category, we expect many to converge with the security stack by focusing on building smart integrations. Through these integrations, AI governance platforms can both ingest data from and feed data to tools across categories like data governance, privacy, and access control to better define configurations and inform decision-making. Building this connectivity will be key to enabling integrated risk mitigation at the enterprise level.

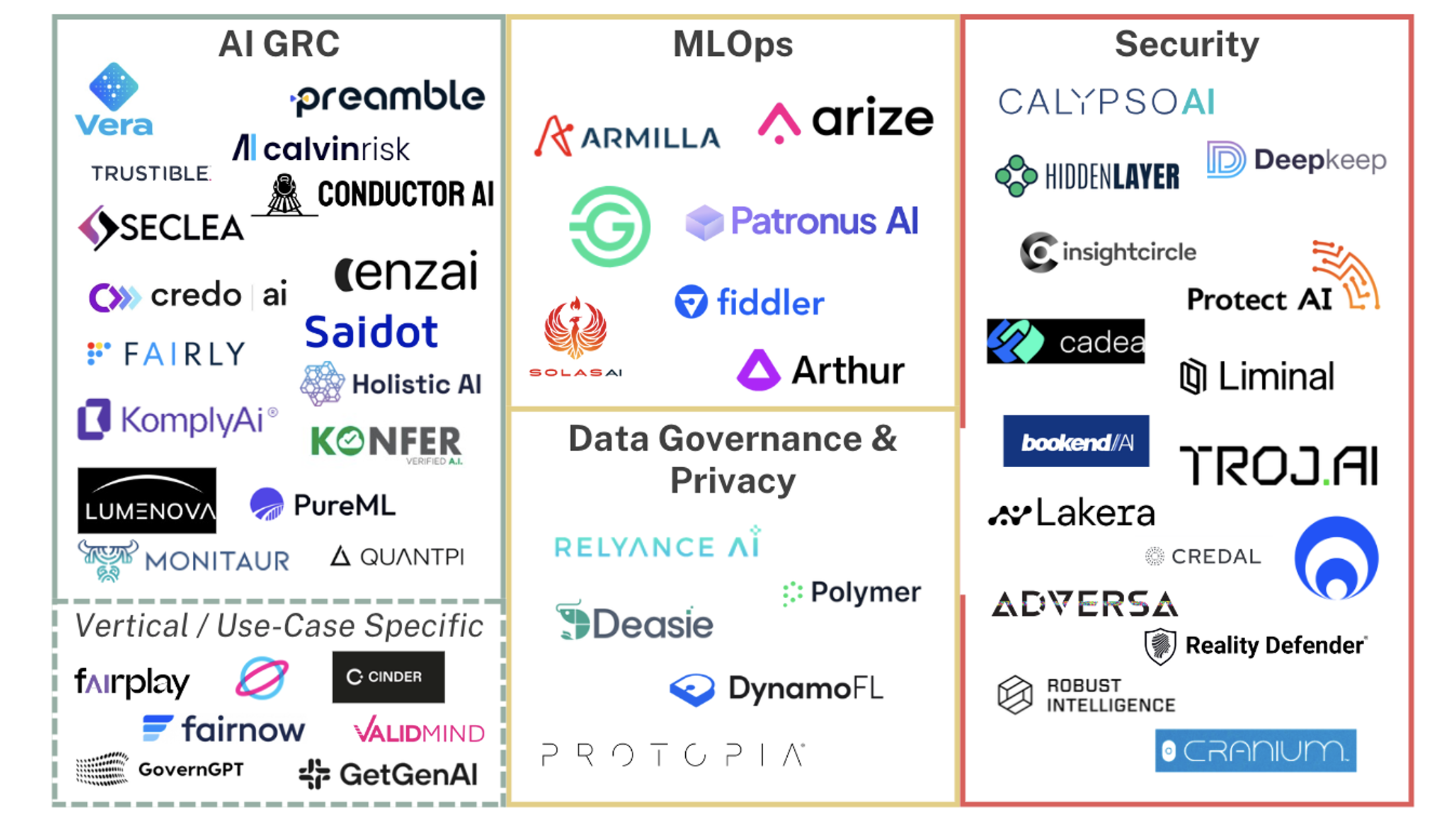

The other trend we’re bullish on is the “verticalization” of solutions to meet the unique demands of high-scrutiny sectors or use cases. For instance, FairNow addresses model bias, starting with HR use cases, to help companies comply with laws like the NY Bias Law; GetGen AI and GovernGPT focus on creating and moderating compliant content; and Lega helps configure guardrails specifically for law firms.

Security risks involve external threats that malicious parties may exploit. These risks can compromise model performance and lead to unauthorized access to sensitive information.

As in governance, GenAI not only escalates known security issues such as data leakage but also introduces new threat vectors, including sophisticated manipulation tactics like prompt injection.

Because of this, we expect the space to unfold in a similar manner. The first wave of AI security solutions, which has been in the market for several years and is often not LLM-specific, focuses on horizontal measures like asset detection, vulnerability prioritization, and remediation strategies.

The next wave – which is nascent but emerging – is where we believe diverse, LLM-specific point solutions will arise to target very specific threat vectors or components of the tech stack. For example, Calypso AI emphasizes data security, Bookend AI provides a secure development platform, while solutions like Hidden Layer and Lakera AI adopt an endpoint-driven strategy. Reality Defender, on the other hand, focuses on threats associated with the actual outputs, providing deepfake detection.

Overall, while we fully believe the AI-security market, and specifically the LLM-security market, is poised for continued momentum, it’s in its early stages. The evolution of this market will parallel the maturation of GenAI usage within enterprises and across certain industries.

While AI governance and risk assessment, the previous category, are table-stakes first steps for adopting GenAI, cybersecurity solutions can require deeper technical integrations and are often tailored for specific functions. For this reason, we believe enterprises will need clarity and certainty around how they will implement GenAI before investing the financial and human capital required by many security solutions.

An Evolving Landscape of Solutions

While the below market map is by no means exhaustive, it showcases the remarkable number of startups, differing approaches, and rapid pace of innovation in GenAI risk mitigation, underscoring its critical priority. In fact, it’s likely that in the time between writing and publishing this post, we’ll have seen a number of new solutions whose logos we haven’t added here.

While questions remain, some facts are clear: this sector is experiencing unprecedented demand, offering numerous opportunities for potential market leaders and representing a multi-billion dollar investment frontier.

As always, we welcome collaboration and diverse perspectives. If you’re actively involved in shaping this field as a builder, thought leader, investor, or buyer, please reach out to Saaya ([email protected]) and Saurabh ([email protected]).
