Securing GenAI – From Risk to Resilience

In a previous post, we discussed the AI Risk Stack, highlighting various risk categories and double–clicked into AI Governance and Compliance. In this post, we’re exploring new security attack vectors exposed by GenAI , examining market evolution, and offering insights on how organizations can prepare themselves for GenAI adoption.

Understanding Generative AI Security Risks

GenAI has swiftly become an essential part of modern business operations, integrating into applications like chatbots, content generation, workflow management, and beyond. This integration demands a sophisticated infrastructure stack to support and optimize these AI systems. Key components of this stack include model training, Retrieval-Augmented Generation (RAG), vector databases, and various automation and observability tools.

As GenAI becomes ubiquitous, it introduces novel attack vectors previously non-existent in traditional applications. These include prompt injection attacks, adversarial prompting, data poisoning, deepfake creation, data leaks, supply chain attacks, and more. These threats exploit the unique features of Gen AI, such as its ability to generate highly convincing content, its reliance on extensive datasets, and transformer neural networks. The expanded landscape of attack vectors is:

Adversarial Attacks:

- Prompt Injection involves inserting hidden instructions or context within an application’s conversational or system prompt interfaces or in the generated outputs. This can lead to unauthorized actions, data leaks, and data exfiltration.

- Poisoning Attacks: Attackers can modify pre-trained models to generate false facts or malicious outputs. Think of it as someone tampering with a recipe book so that all recipes produce disastrous results. A notable example is PoisonGPT, where a compromised AI model was uploaded to a public platform, intentionally spreading misinformation.

Digital Supply Chain Attacks:

- Component Vulnerabilities: GenAI components, often used as microservices in popular business applications, can be targeted through machine learning (ML) attack techniques, such as phishing, malware, and training data manipulation. These vulnerabilities can be critical and necessitate robust patch management to prevent exploitation.

Data Privacy and Protection:

- Hallucinations and Misinformation: GenAI models can produce outputs that are inaccurate, illegal, or infringe on copyrights. These “hallucinations” can compromise enterprise decision-making and potentially harm a company’s reputation.

- Privacy Violations: Gen AI’s capability to create and personalize content raises significant privacy and data security concerns. For example, AI-generated images or videos of people without their consent can violate privacy norms and regulations, leading to serious implications.

A report by Menlo Security revealed that within a 30-day period, 55% of data loss protection events involved users attempting to input personally identifiable data into GenAI sites. This included confidential documentation, which made up 40% of these attempts. GenAI systems, such as ChatGPT and Gemini, are noted for their potential to leak proprietary data when responding to prompts from users outside the organization. This risk is akin to storing sensitive data on a file-sharing platform without proper security controls.

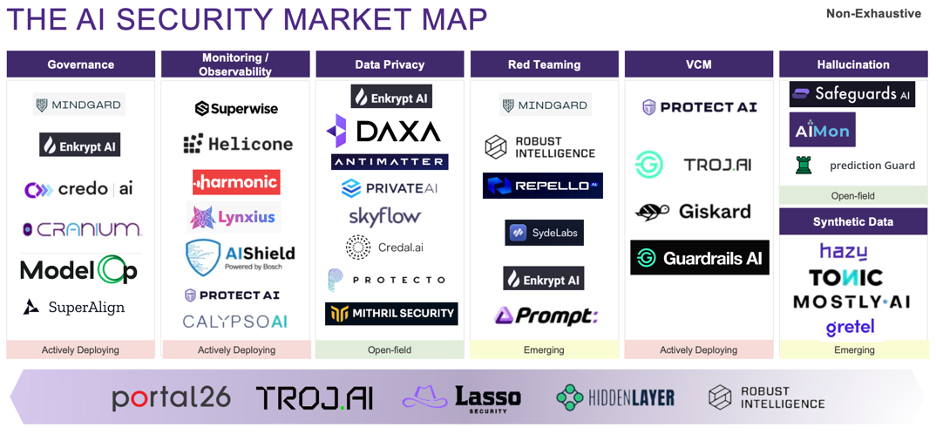

These new attack vectors require new-age solutions from modern companies. While tools like Mindgard and Superwise help monitor and govern the model supply chain, solutions from Antimatter.io and Protopia AI are crucial for ensuring proper access configurations for large language model data and maintaining robust data security.

Start-ups Leading the Way

GenAI surged into the spotlight in late 2022, but securing AI solutions has been a concern since the 2010s. For years, we’ve been working on managing machine learning models, creating synthetic data, and running red team exercises to find weaknesses. However, the advent of GenAI has introduced new threat vectors, pushing us to innovate more.

These emerging challenges are already making waves in the cybersecurity market, impacting startups, major vendors, and systems integrators. The latest advancements were prominently featured at the 2024 RSA Conference’s Innovation Sandbox and the Black Hat Startup Spotlight.

Observability and Governance remain paramount concerns for large enterprises when adopting GenAI. This isn’t just about monitoring and governing the use of GenAI within organizations; it also extends to the GenAI-based features and products these organizations create. Start-ups like Helicone and Lasso Security have tapped into this critical need. SuperAlign helps govern all models and features in use, tracking compliance and conducting risk assessments. Lynxius addresses the evaluation and monitoring of model performance on key parameters such as model drift and hallucinations.

As discussed in our previous blog, governance and observability serve as the stepping stone for organizations to understand what’s happening before implementing additional software to mitigate risks. Harmonic Security, a finalist for the RSAC Innovation Sandbox contest, helps organizations track GenAI adoption and identify Shadow AI (unauthorized GenAI app usage) while preventing sensitive data leakage through a pre-trained “Data-Protection LLM.” Taking a new approach, they engage directly with the end customer – the employee – to remediate rather than firing an alert with the security team.

Privacy violations, data leaks, and model poisoning are becoming increasingly common as GenAI itself is used to launch such attacks. This is an ever-expanding category, with start-ups adopting various approaches to tackle these challenges. Enkrypt AI ensures that personally identifiable information (PII) is redacted before any prompt is sent to an LLM, while DAXA enables business-aware policy guardrails on the LLM responses. Antimatter, a sensitive data platform, manages LLM Data by ensuring proper access controls, encryption, classification, and inventory management. Protecto secures retrieval-augmented generation (RAG) pipelines, implements role-based access control (RBAC), and helps redact PII. Each start-up offers a unique solution, making this category one to watch closely.

Synthetic Data – For industries with strict privacy requirements, synthetic data is a game-changer. Start-ups like Tonic and Gretel make it possible to train models using synthetic data instead of your own proprietary data. Tonic helps de-identify datasets, transforming production data to make it fit for training while maintaining a pipeline to keep the synthetic data current. Gretel provides API support, allowing you to not only train GenAI models with “fake data” but also validate the use cases with quality and privacy scores.

Red Teaming – The Biden Administration’s AI executive order now requires companies developing foundation models to share their red teaming results with the government. Similarly, the EU AI Act mandates adversarial testing for these models. Anthropic recently released a proposal calling for standardizing AI Red-Teaming efforts. Despite the complexity and multiple interpretations, start-ups like Mindgard, Prompt Security, Repello AI, and SydeLabs are helping organizations overcome this hurdle. Mindgard has created an AI attack library, providing continuous automated red teaming for AI features. Meanwhile, Repello AI, a stealth start-up, offers red teaming for a suite of LLMs and vector databases.

Hallucinations are here to stay, at least for now. Companies like AIMon and Prediction Guard are making big strides in tackling this issue. AIMon boasts a solution that is ten times cheaper while tackling the biggest challenge here – latency. Prediction Guard, on the other hand, goes beyond data security and privacy by validating outputs to prevent toxic responses.

Start-ups often begin by solving a specific problem but quickly expand their scope to tackle additional challenges, often focusing on Monitoring and Observability. Portal26, Lasso Security, Troj.AI, Hidden Layer, and Robust Intelligence have proven their worth by broadening their feature sets, touching upon Governance, Observability, various forms of data protection, red teaming, and vulnerability management. Collectively, they have raised $100M+ in funding.

Adoption Pathways

To effectively adopt GenAI security solutions from startups while mitigating associated risks, businesses should implement robust observability and governance frameworks. Here are some key strategies:

- Start with Pilot Programs: Test AI solutions in controlled environments to understand their capabilities and limitations.

- Conduct Compatibility Assessments: Address technical challenges before full-scale deployment to ensure smooth integration.

- Implement Robust Access Controls: Limit who can access AI systems and data security.

- Conducting Adversarial Testing: Regularly test AI systems for vulnerabilities to prevent attacks.

- Develop Comprehensive Incident Response Plans: Be prepared to respond quickly to any security breaches.

Observability is crucial. Businesses need comprehensive monitoring and logging of AI system activities to detect anomalies and potential security incidents in real time. Continuous monitoring of AI models helps identify drifts, biases, and other issues that could compromise security and reliability.

Establishing AI governance frameworks that define roles, responsibilities, and policies ensures compliance with regulations and ethical guidelines. Ensuring transparency and explainability of AI models builds trust and accountability.

By following these pathways, organizations can harness GenAI’s benefits while proactively managing risks and ensuring compliance with relevant regulations and ethical standards.

Jump Capital has a long history of investing in cybersecurity companies across the stack, such as Siemplify, Flashpoint, Ironscales, Enso Security, Cloud Conformity, and NowSecure. We are excited to partner with cybersecurity builders who are working to make AI adoption easier for organizations. If you are building in this space, we’d love to hear from you.

Front Page

Front Page